Recent Paper: Narrow or Wide? The Phenomenology of 750 GeV Diphotons

So here’s my contribution to the diphoton anomaly feeding frenzy (paper here). As I spoke about here, in the early Run-II data from the LHC there was an announcement from ATLAS that they had seen something a bit unusual in their search for new physics in events with pairs of photons (thus: diphotons). When the two photons had an invariant mass near 750 GeV, ATLAS saw a few more events than background would predict. Here’s what that looks like.

This excess of events has a statistical significance of $3.6\sigma$, meaning we should see something like this happen by random chance about one out of every 3140 times. That’s pretty good, though nowhere near the particle physics gold standard of $5\sigma$ (1 in 1,744,000). However, you have to temper your expectations a bit, since we’d be equally excited about a fluctuation like this anywhere in the spectrum of invariant masses (a priori there was nothing special about 750 GeV). Therefore you have to factor in a Look Elsewhere Effect, which basically says: yes, this only happens once out of every 3140 times, but you tried a lot of times by looking for excesses at many different masses, so slow your roll. Taking the Look Elsewhere Effect into account correctly is difficult, since an excess at 750 GeV overlaps with an excess at 745 GeV (for example), and you have to figure out by how much these overlap and therefore how many independent times you’ve effectively looked for new physics. The correct way is to run mock experiments: create fake data (I refer to these as “pseudoexperiments”), inject a fake signal, and see how much it overlaps at different masses. But that takes a lot of computing to do right.

But, after including this, ATLAS sees a $2.0\sigma$ “global” (rather than “local”) excess. That’s 1 in 22.
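For the curious, here is a toy version of the pseudoexperiment idea in Python with numpy and scipy. It is the common background-only variant, not ATLAS's actual procedure: throw many fake data sets from a known, smoothly falling background, scan a sliding window of bins for the largest local excess in each one, and count how often that largest excess is at least as significant as a given local threshold. The 40-bin background, the 3-bin window, and the 2000 toys are made-up illustrative choices.

```python
import numpy as np
from scipy import stats

def max_local_significance(counts, expected, window=3):
    """Largest one-sided local significance (in sigma) over all sliding windows of bins."""
    kernel = np.ones(window)
    n = np.convolve(counts, kernel, mode="valid")    # observed events in each window
    b = np.convolve(expected, kernel, mode="valid")  # expected background in each window
    p_local = stats.poisson.sf(n - 1, b)             # P(N >= n) for a Poisson mean b
    return stats.norm.isf(p_local).max()

def global_p_value(z_local, expected, window=3, n_toys=2000, seed=1):
    """Fraction of background-only pseudoexperiments whose largest excess reaches z_local."""
    rng = np.random.default_rng(seed)
    z_max = np.array([
        max_local_significance(rng.poisson(expected), expected, window)
        for _ in range(n_toys)
    ])
    return np.mean(z_max >= z_local)

# Hypothetical smoothly falling background: 40 bins, from 200 down to a few events each.
expected = 200.0 * np.exp(-np.linspace(0.0, 4.0, 40))
z_local = 3.0
p_local = stats.norm.sf(z_local)
p_global = global_p_value(z_local, expected)
print(f"local p = {p_local:.2e}, global p = {p_global:.3f}")
```

Because the scan gets many chances to fluctuate upward, the global p-value comes out well above the local one; that gap is the look elsewhere effect. Note that this toy uses one-sided Poisson p-values, while the "1 in N" numbers in this post follow a two-sided convention.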

So, exciting, but nothing to write home about.
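The "1 in N" numbers in this post can be reproduced from the significances with the Gaussian tail probability. A minimal Python sketch, assuming (as the quoted numbers seem to) a two-sided convention, $p = 2\,[1-\Phi(Z)]$; the one-sided convention that is also common in particle physics would exactly double each N.

```python
from scipy.stats import norm

def one_in_n(z_sigma, two_sided=True):
    """N such that a fluctuation of at least z_sigma happens about once in N tries."""
    p = norm.sf(z_sigma)          # one-sided Gaussian tail probability
    if two_sided:
        p *= 2.0                  # count upward and downward fluctuations
    return 1.0 / p

for z in (3.6, 5.0, 2.0, 2.6, 1.2):
    print(f"{z:.1f} sigma -> 1 in {one_in_n(z):,.0f}")
```

With the two-sided convention these come out at roughly 3,140, 1.74 million, 22, 107, and 4, in line with the figures quoted in the text.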

But, CMS also released data on the same day, including their own version of the same search. They had less data, for various reasons, but they ALSO see something near 750 GeV. They see an excess in the diphoton invariant mass. Their significance is $2.6\sigma$ local (1 in 110 or so) or $1.2\sigma$ global (1 in 4). That’s really nothing at all to write home about, other than the fact that it is in the same place as the ATLAS anomaly. Quick and dirty estimates might say that the combination of the two experimental results should lead to an excess that has a local statistical significance of

\[ \sqrt{(3.6\sigma)^2+(2.6\sigma)^2} = 4.4\sigma. \]

However, that’s an incredibly rough estimate.
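One way to see why it is rough: in a toy model where each experiment measures the same signal strength $\mu$ with a Gaussian error $s_i$, so that $Z_i = \hat\mu_i/s_i$, the inverse-variance weighted combination has significance

\[ Z = \frac{Z_1/s_1 + Z_2/s_2}{\sqrt{1/s_1^2 + 1/s_2^2}} \le \sqrt{Z_1^2 + Z_2^2}, \]

where the inequality is Cauchy-Schwarz, with equality only when both experiments prefer the same $\hat\mu$. So the quadrature sum is a best case. A Python sketch with hypothetical errors (not the real ATLAS and CMS fits):

```python
import numpy as np

def combined_z(z, s):
    """Significance of the inverse-variance weighted mean of Gaussian measurements.

    z[i] = mu_hat[i] / s[i] is each experiment's own significance, s[i] its error.
    """
    z = np.asarray(z, dtype=float)
    w = 1.0 / np.asarray(s, dtype=float)
    return np.sum(z * w) / np.sqrt(np.sum(w ** 2))

quadrature = np.hypot(3.6, 2.6)  # the quick estimate, about 4.44

# Both experiments prefer mu_hat = 3.6: the quadrature sum is recovered.
same_mu = combined_z([3.6, 2.6], [1.0, 3.6 / 2.6])

# Hypothetical case where the first experiment prefers a larger signal (3.6 vs 1.3).
different_mu = combined_z([3.6, 2.6], [1.0, 0.5])

print(quadrature, same_mu, different_mu)
```

When the two experiments disagree about how much signal there is, the combination lands below the naive $4.4\sigma$.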

So what I decided to do in this paper is just take all the available data: the ATLAS result and the CMS result, and throw in the two similar searches from the experiments during the Run-I data-taking (which didn’t see anything), and see what I could come up with.

I was pretty explicitly not engaging in any model-building. I wasn’t trying to figure out what sort of particle would be producing the excess, or how it would fit into any bigger model, or even which other searches we should be looking at. I just wanted to answer some questions for myself to decide how seriously I need to take this anomaly. After doing a fair amount of work, I decided to share it with other people, in case they found it useful.

I should note I’m not the first to address these problems. Some of the very first papers on the anomaly obviously covered very similar ground; in particular I’ll point out these three: (1)(2)(3). My work should be thought of as complementary (not to mention a very late arrival). The main advantage I had was time: I could do somewhat more computationally intensive fits, float the backgrounds, and a few other things. In general, I agree with the results that these other papers found (and again, they were out in the first week; I took a month). I think I add a few things to the discussion, so I hope those authors aren’t annoyed at me.

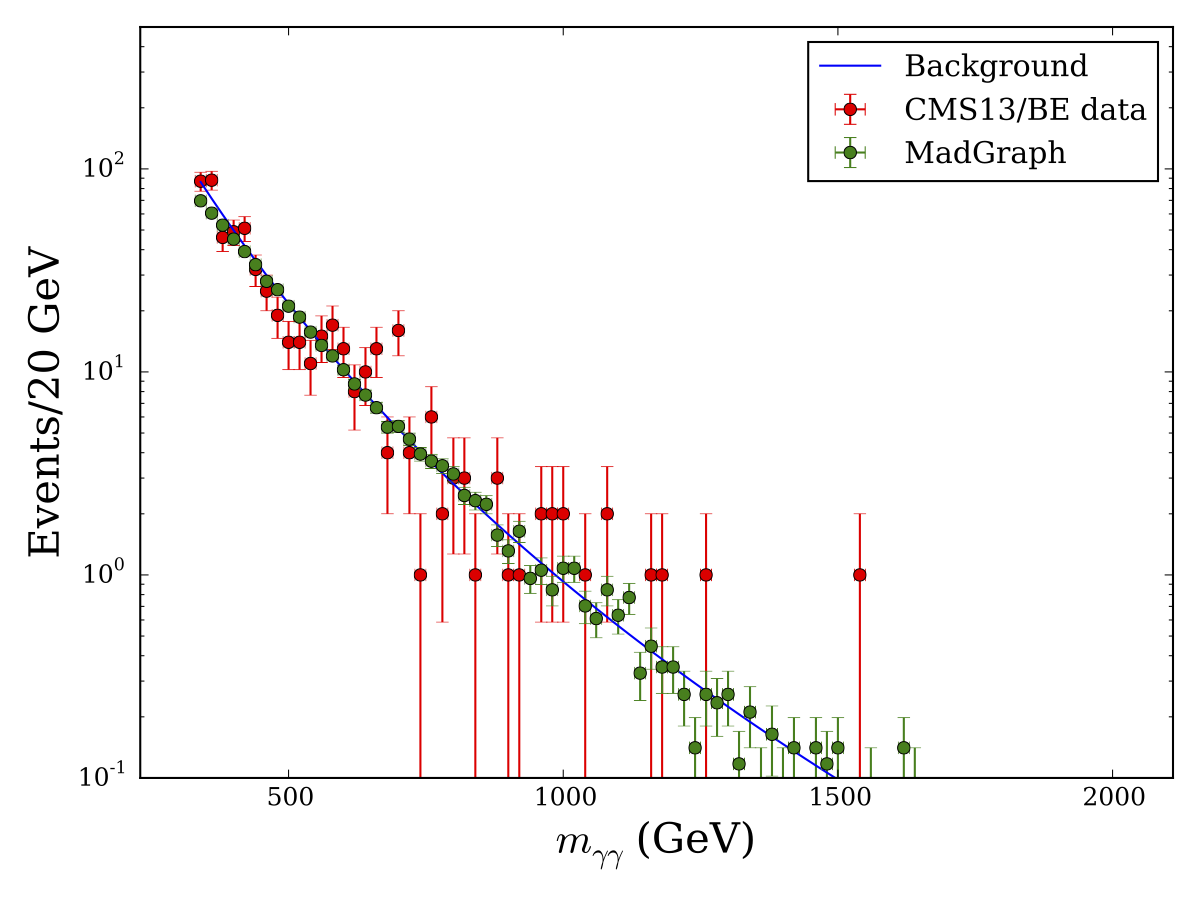

So, first up, I want to know how well the various experiments agree. I had four data sets to work with: I called them ATLAS13 (the 13 TeV Run-II diphoton analysis from ATLAS), CMS13 (Run-II diphotons from CMS), ATLAS8 (the 8 TeV Run-I diphoton analysis from ATLAS), and CMS8 (I think you get the naming scheme by now). The first thing I did was fire up a useful program called GraphClick and digitize the data straight from the experiments’ published plots. The results look like this (red markers on the plots):

There are 5 plots here. This is because CMS13 combines two separate searches: one where both photons end up in the “barrel” of the detector (the long cylinder that makes up the middle part of CMS), and one where one photon ends up in one of the “endcaps” of the detector. CMS13 treated these differently, so I must as well.

ATLAS and CMS also gave functions that they think give a good representation of the Standard Model background: the distribution of diphoton invariant masses $m_{\gamma\gamma}$ in events that don’t come from new physics. These functions depend on a number of free parameters, so I found the best-fit values for those parameters by fitting to the data with background only. The results are the blue lines in the plot. (All of this is being done in Python, using the packages numpy and scipy. I’m loving Python right now, even though I learned to program in Fortran77 and thus program like an idiot. Python also isn’t terribly fast, and I spent no time trying to optimize my code, so towards the end the time it took to finish analyses really started to be a problem. It limited some things I could have done, since I didn’t want to wait a week for each result.)
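Here is a rough sketch of that kind of background-only fit in Python (numpy and scipy), not the code used for the paper. The two-parameter shape $(1-x^{1/3})^b x^{a}$ with $x = m_{\gamma\gamma}/\sqrt{s}$ is an assumed stand-in for the functions the experiments actually provide (their papers give the real forms), the "digitized" counts are randomly generated, and the fit minimizes a binned Poisson negative log-likelihood with `scipy.optimize.minimize`. One design choice: the overall normalization is set to its analytic best-fit value, $\sum_i n_i / \sum_i f_i$, so the optimizer only has to handle the shape parameters.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import gammaln

SQRT_S = 13000.0  # collision energy in GeV

def background_shape(m, b, a):
    """Assumed falling shape (1 - x**(1/3))**b * x**a with x = m / sqrt(s)."""
    x = m / SQRT_S
    return (1.0 - np.cbrt(x)) ** b * x ** a

def poisson_nll(expected, counts):
    """Binned Poisson negative log-likelihood."""
    expected = np.clip(expected, 1e-12, None)
    return np.sum(expected - counts * np.log(expected) + gammaln(counts + 1.0))

def profiled_nll(params, m, counts):
    """NLL with the overall normalization set to its analytic best fit, sum(n) / sum(f)."""
    f = background_shape(m, *params)
    return poisson_nll(f * counts.sum() / f.sum(), counts)

# Stand-in for a digitized spectrum: 40 GeV bins from 200 to 1600 GeV, drawn from an assumed truth.
rng = np.random.default_rng(0)
m = np.arange(220.0, 1600.0, 40.0)           # bin centers
true_mean = 0.032 * background_shape(m, 10.0, -3.0)
counts = rng.poisson(true_mean)

result = minimize(profiled_nll, x0=[8.0, -2.5], args=(m, counts), method="Nelder-Mead",
                  options={"xatol": 1e-8, "fatol": 1e-8, "maxiter": 20000})
b_fit, a_fit = result.x
f_fit = background_shape(m, b_fit, a_fit)
fitted = f_fit * counts.sum() / f_fit.sum()  # best-fit background per bin (the "blue line")
print(result.success, b_fit, a_fit)
```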

Next I used a program called MadGraph to generate a huge number of simulated Standard Model events with two photons (again at both 13 and 8 TeV). In a bit, I’m going to start generating simulated versions of the new physics that could be making up these anomalies, so step 1 is to make sure that I can imitate the analysis for Standard Model processes. Those simulated events are then piped through simulated versions of the ATLAS and CMS detectors, and the results are the green markers on the plots. The point is that, largely, the simulated background matches up with the observation without much fiddling on my part. Great, that means I can simulate new things.

As an aside, the program MadGraph is an incredible tool for people in my line of work. It’s a general purpose event simulator that allows you to generate a massive range of possible new physics, on the fly. So with MadGraph in my toolkit, the first thing I could do was start futzing around with new physics by generating events, rather than having to build something up from scratch. MadGraph can’t do everything, but it can do a lot, and makes a lot of our work much easier. The authors (all Ph.D.-owning physicists) don’t get nearly enough credit for their invaluable service to the community.

OK, so simulation checks out. I then picked the basic models I wanted to investigate. As I said, I didn’t want to get into the model-building weeds, so I didn’t get fancy. If a new particle is decaying to two photons with an invariant mass of 750 GeV, then that particle should also have a mass of 750 GeV. There are of course clever ways around this, but that’s more model building than I wanted to get into, and it’s already been covered in the literature on this anomaly. If the final state is two spin-1 photons, then the particle must be either spin-0 (a “scalar” or “pseudoscalar”) or spin-2 (a heavy partner of a graviton, usually called a Kaluza-Klein graviton). There are ways around this as well, but it’s been talked about already in the literature. Finally, the particle must be produced by smashing protons together, so it must interact with something in the proton. The simplest guesses are that it interacts with either the gluons, the light quarks (up and down), the 2nd generation quarks (charm and strange), or the bottom quark. Once I pick one of these options, I can tell you how many particles should have been produced at the 8 TeV run of the LHC, compared to the 13 TeV run (it’s about 1/4.5 for gluons, $c/s$ or $b$ quarks, and 1/3 for $u/d$ quarks). There are ways around this, but I’m sure you guessed that already. Never tell theorists what we can’t do.
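To make the 8 versus 13 TeV bookkeeping concrete, here is a small Python sketch that uses only the approximate ratios quoted above. The 1 fb input is a hypothetical number, and real ratios depend on the resonance mass and on the parton distributions.

```python
# Approximate ratio sigma(8 TeV) / sigma(13 TeV) for a 750 GeV resonance, by production mode,
# as quoted in the text (gluons, c/s and b quarks ~ 1/4.5; u/d quarks ~ 1/3).
RATIO_8_OVER_13 = {"gg": 1 / 4.5, "cs": 1 / 4.5, "bb": 1 / 4.5, "ud": 1 / 3.0}

def to_13tev_equivalent(sigma_8tev_fb, production):
    """Express an 8 TeV cross section (or limit) as the 13 TeV cross section it corresponds to."""
    return sigma_8tev_fb / RATIO_8_OVER_13[production]

# Hypothetical 8 TeV upper limit of 1 fb, read as a 13 TeV limit for each production mode.
for mode in RATIO_8_OVER_13:
    print(mode, to_13tev_equivalent(1.0, mode))
```

Because $u/d$ production falls off less going from 13 to 8 TeV, the same Run-I limit translates into a tighter 13 TeV-equivalent limit for $u/d$ couplings (3 fb here) than for gluon couplings (4.5 fb).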

Finally, I needed to specify the width $\Gamma$ of the particle. A particle has a mass $m$, which means that the photons coming from its decay should have an invariant mass equal to $m$. But by Heisenberg’s uncertainty principle, you can’t know the energy of something exactly unless you have an infinite time to measure it. Since this particle decays into photons, you can’t measure its mass (its rest energy) over an infinite time, so the mass gets smeared out a bit. Instead of always measuring $m$, you’d get a spread of values, sometimes a bit more than $m$, sometimes a bit less; the size of that spread is the “width.” The width is inversely proportional to the lifetime $\tau$ of the particle ($\Gamma = \hbar/\tau$), so a large $\Gamma$ means the particle decays quickly.
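For scale, the width-lifetime relation $\Gamma = \hbar/\tau$ can be evaluated directly. A short Python check for a 45 GeV width, using `scipy.constants` (where $\hbar/e$ gives $\hbar$ in eV·s):

```python
from scipy import constants

HBAR_EV_S = constants.hbar / constants.e  # reduced Planck constant in eV*s (~6.58e-16)

def lifetime_seconds(width_gev):
    """Mean lifetime tau = hbar / Gamma for a total width Gamma given in GeV."""
    return HBAR_EV_S / (width_gev * 1e9)

tau = lifetime_seconds(45.0)
decay_length_m = constants.c * tau  # c*tau, a length scale for how far it gets before decaying
print(f"tau = {tau:.2e} s, c*tau = {decay_length_m:.1e} m")
```

That is about $1.5\times10^{-26}$ s, with $c\tau$ of roughly $4\times10^{-18}$ m, a few thousandths of a femtometer.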

We would normally expect the width of the particle to be much less than its mass, in which case the spread in the measured invariant mass of the diphotons would come from the fact that ATLAS and CMS aren’t perfect detectors; they get things slightly wrong. That is, you’d be detector limited. ATLAS13 claims evidence for a better fit to the data if the new particle (assuming there is a new particle, of course) is “wide,” with a width of 45 GeV. That’s shockingly big, if true, and a LOT of the theory papers written about this anomaly have been assuming it’s true and wrestling with the implications. Much of this paper’s motivation was just trying to find out for myself how much evidence there was, one way or the other.
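To see why "narrow" effectively means "detector limited," here is a small Python sketch that convolves a (non-relativistic) Breit-Wigner line shape of width $\Gamma$ with a Gaussian detector resolution, using `scipy.special.voigt_profile`, and measures the full width at half maximum of the result. The 10 GeV resolution is an assumed, illustrative number, not the actual ATLAS or CMS value.

```python
import numpy as np
from scipy.special import voigt_profile

MASS = 750.0        # GeV
RESOLUTION = 10.0   # assumed Gaussian detector resolution (sigma), GeV

def observed_fwhm(width_gev, resolution=RESOLUTION):
    """FWHM of a Breit-Wigner of total width width_gev smeared by a Gaussian resolution."""
    m = np.linspace(MASS - 300.0, MASS + 300.0, 60001)  # 0.01 GeV grid
    # voigt_profile takes the Lorentzian half-width at half-maximum, i.e. Gamma / 2
    shape = voigt_profile(m - MASS, resolution, width_gev / 2.0)
    above = m[shape >= 0.5 * shape.max()]
    return above[-1] - above[0]

for gamma in (0.001, 5.0, 45.0):
    print(f"Gamma = {gamma:6.3f} GeV -> observed FWHM ~ {observed_fwhm(gamma):.1f} GeV")
```

With these assumptions a tiny width just reproduces the Gaussian resolution (FWHM $\approx 2.355\,\sigma$), a few GeV of width broadens the peak only modestly, and a 45 GeV width dominates it.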

Really I should scan over the width, going from a detector-limited narrow width to over 45 GeV, but I don’t have the computational setup to do that justice, so I picked just the narrow width and the 45 GeV width.

For each option (a choice of mass between 700 and 800 GeV, a spin, a width, and a coupling), I generated signal events using MadGraph and ran them through simulations of all 4 experimental setups. I then did a statistical fit to the data, asking, for each experiment, what amount of this particular signal, added to the background, looked most like the observed data. In each case, I floated the background parameters I mentioned earlier, allowing the amount of background to slide around in response to the amount of signal in order to get the best possible fit.
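A minimal Python sketch of a fit of this type, under stated assumptions: one experiment, a binned Poisson likelihood, a fixed signal template (a Gaussian peak standing in for the MadGraph-plus-detector output), a background $e^{c_0 + c_1 t}$ whose parameters float along with the signal yield, and a significance taken as $Z = \sqrt{2\,\Delta(-\ln L)}$ between the background-only and signal-plus-background fits. That last step is the usual asymptotic approximation; the paper's exact statistical treatment may differ. All inputs are made up.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import gammaln
from scipy.stats import norm

BIN = 20.0
m = np.arange(510.0, 1000.0, BIN)                 # hypothetical bin centers, GeV
t = (m - 750.0) / 100.0
signal_template = norm.pdf(m, 750.0, 10.0) * BIN  # ~unit-normalized narrow peak (stand-in)

def expected(params, mu):
    """Background exp(c0 + c1*t) with floating (c0, c1), plus mu signal events."""
    c0, c1 = params
    return np.exp(c0 + c1 * t) + mu * signal_template

def nll(exp_counts, counts):
    exp_counts = np.clip(exp_counts, 1e-12, None)
    return np.sum(exp_counts - counts * np.log(exp_counts) + gammaln(counts + 1.0))

OPTS = {"xatol": 1e-8, "fatol": 1e-8, "maxiter": 20000}

def fit_and_significance(counts):
    # Background-only fit: only the background parameters float.
    bkg = minimize(lambda p: nll(expected(p, 0.0), counts),
                   x0=[np.log(counts.mean() + 1.0), 0.0], method="Nelder-Mead", options=OPTS)
    # Signal + background: start at the background-only optimum with mu = 0,
    # so this nested fit can only match or improve on it.
    sb = minimize(lambda p: nll(expected(p[:2], p[2]), counts),
                  x0=[*bkg.x, 0.0], method="Nelder-Mead", options=OPTS)
    mu_hat = sb.x[2]
    q0 = 2.0 * (bkg.fun - sb.fun) if mu_hat > 0 else 0.0  # one-sided: only excesses count
    return mu_hat, np.sqrt(max(q0, 0.0)), bkg.fun, sb.fun

rng = np.random.default_rng(3)
truth = np.exp(np.log(20.0) - 0.8 * t) + 30.0 * signal_template  # 30 injected signal events
counts = rng.poisson(truth)
mu_hat, z, nll_b, nll_sb = fit_and_significance(counts)
print(f"fitted signal events = {mu_hat:.1f}, local significance ~ {z:.1f} sigma")
```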

So that took a while, because then I started asking how much of a particular mass/spin/coupling/width option could be fit in combinations of data. Say, what’s the preferred amount of signal if I want the best fit to both CMS13 and ATLAS13, with both required to contain the same particle (since physics doesn’t change between Switzerland and France, I hope)? Or, how can I get the best fit to all four experiments?
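A sketch of how such a combined fit can be organized, under toy assumptions: each experiment keeps its own floating background (here $e^{c_0 + c_1 t}$), all experiments share one signal cross section, and an experiment-specific factor turns that cross section into expected signal events (luminosity times efficiency, and for 8 TeV data the cross-section ratio). Because the backgrounds are independent, the combined $-\ln L$ at a fixed cross section is just the sum of each experiment's $-\ln L$ profiled over its own background parameters. The two experiments, their factors, resolutions, and injected signals are hypothetical, the preference in $\sigma$ uses the assumed asymptotic conversion $\sqrt{2\,\Delta(-\ln L)}$, and the scan uses a coarse grid, so the numbers are only approximate.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import gammaln
from scipy.stats import norm

BIN = 20.0
m = np.arange(610.0, 900.0, BIN)              # hypothetical shared binning, GeV
t = (m - 750.0) / 100.0

def nll(exp_counts, counts):
    return np.sum(exp_counts - counts * np.log(exp_counts) + gammaln(counts + 1.0))

def profile_nll(xsec_fb, counts, events_per_fb, resolution):
    """-ln L at a fixed cross section, minimized over this experiment's own background (c0, c1)."""
    signal = xsec_fb * events_per_fb * norm.pdf(m, 750.0, resolution) * BIN
    objective = lambda c: nll(np.exp(c[0] + c[1] * t) + signal, counts)
    return minimize(objective, x0=[np.log(counts.mean() + 1.0), 0.0], method="Nelder-Mead",
                    options={"xatol": 1e-8, "fatol": 1e-8, "maxiter": 5000}).fun

# Two hypothetical experiments: (events per fb, resolution in GeV, injected cross section in fb).
rng = np.random.default_rng(7)
experiments = {"A": (3.0, 10.0, 8.0), "B": (2.0, 12.0, 3.0)}
data = {name: rng.poisson(20.0 * np.exp(-0.8 * t) + xs * k * norm.pdf(m, 750.0, res) * BIN)
        for name, (k, res, xs) in experiments.items()}

xsec_grid = np.linspace(0.0, 20.0, 41)
profiles = {name: np.array([profile_nll(x, data[name], k, res) for x in xsec_grid])
            for name, (k, res, _) in experiments.items()}
combined = profiles["A"] + profiles["B"]      # backgrounds independent, cross section shared

for name, curve in {**profiles, "A+B": combined}.items():
    best = xsec_grid[np.argmin(curve)]
    z = np.sqrt(max(2.0 * (curve[0] - curve.min()), 0.0))  # preference over zero signal
    print(f"{name}: best cross section ~ {best:.1f} fb, preference ~ {z:.1f} sigma")
```

As long as each profile curve falls before its own minimum and rises after it, the combined best fit has to land between the individual best fits, and the combined minimum can never be lower than the sum of the individual minima; the gap between the two measures how much the experiments disagree.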

I’ll show the best fit first, which isn’t how I did it in the paper. The best fit to ALL data turns out to be a 750 GeV particle, coupled to gluons, with spin-0, either narrow or wide (I’ll return to that in a bit). Here’s what those fits look like, zoomed into the region around the anomaly. The red markers are data, the red lines are the shape of the signal from new physics (solid is the narrow particle, dashed is the wide), the blue line is the best-fit background without signal, and black is the background plus signal (again solid = narrow, dashed = wide). One thing to note is that the best fit is way under the number of events seen by ATLAS.

Why is that? Well, what ATLAS is seeing is not really compatible with the results from the other experiments. For simplicity, I’m going to show the results only for spin-0 particles coupled to gluons (all the other plots are in the paper, if you want to see them). First, let me show you what cross section (see here) each experiment prefers. That’s shown in these two plots, one for narrow and one for wide.

In this plot, the mass is the horizontal axis, and the cross section is the vertical. The red regions are the preferred regions for ATLAS13 (solid red is 68% confidence, light red 90%). The blue regions are the preferred regions for CMS13 (again dark is 68%, light 90%). The dotted black lines at the bottom are the 68% and 90% EXCLUSION limits from the combination of the 8 TeV results of CMS and ATLAS. That is, given that Run-I didn’t see anything, everything above those dotted lines is excluded at the corresponding confidence level (the cross sections are all scaled to 13 TeV numbers, which is where the assumption about gluon coupling comes in).
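One common recipe for 68% and 90% regions in a two-parameter plane like (mass, cross section) is to keep every point whose $2\Delta(-\ln L)$ relative to the best fit is below the $\chi^2$ quantile for two degrees of freedom (about 2.28 and 4.61). The paper's exact convention isn't spelled out here, so treat this as an assumed, standard recipe; the likelihood surface below is a made-up paraboloid.

```python
import numpy as np
from scipy.stats import chi2

def confidence_masks(nll_grid, levels=(0.68, 0.90), n_params=2):
    """Boolean masks of grid points inside each confidence level (Wilks' approximation)."""
    delta = 2.0 * (nll_grid - nll_grid.min())
    return {cl: delta <= chi2.ppf(cl, df=n_params) for cl in levels}

mass = np.linspace(700.0, 800.0, 101)          # GeV
xsec = np.linspace(0.0, 20.0, 201)             # fb, 13 TeV-equivalent
M, S = np.meshgrid(mass, xsec, indexing="ij")
# Hypothetical -ln L surface with its minimum at (750 GeV, 6 fb).
nll_grid = 0.5 * ((M - 750.0) / 8.0) ** 2 + 0.5 * ((S - 6.0) / 2.0) ** 2

masks = confidence_masks(nll_grid)
print({cl: int(mask.sum()) for cl, mask in masks.items()})
```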

If I combine the ATLAS and CMS 13 TeV data, I get the dashed black regions. So there is a set of values that does a decent job of fitting both experiments, with a cross section about half-way between the individual results, as you’d expect. If I throw in the 8 TeV data, the best fit to the full combination is shown by the solid black curves.

Now, that just tells you what the best-fit values are, but not how good a fit each is. That’s what these sets of plots show. Here I’m showing the statistical preference (in $\sigma$) of the indicated data set to have some amount of signal in it with the given mass and width (how much signal? that’s what the previous plots tell you).

So I find that the ATLAS13 data prefers a signal of new physics at about $3.2\sigma$. The real analysis found $3.6\sigma$, so I’m pretty happy with that, as I’m working off of far less information than they are. However, my errors are only statistical: based only on the number of events. Real experimental errors also include systematics, the errors caused by things going wrong or by lack of knowledge. Those systematic errors should make things less likely to look like new physics, so it is surprising that I come so close to (and even underestimate) the significance of this signal. But it gives me a way to calibrate between the real result and my work: the real $3.6\sigma$ turned into this theory $3.2\sigma$. Similarly, instead of $2.6\sigma$ from CMS13, I find only $2.0\sigma$. Note also that I don’t apply a look elsewhere correction. This is because figuring out its value correctly would require a lot of simulations over all four experiments, and it was just too much for my computers to bear. Also, one could argue that we are now only interested in the anomaly at 750 GeV, not any other, so we don’t need this correction anymore. Statistics will tell you the answer to questions, but not which questions to ask.

Now, here’s where things are interesting. When I combine ATLAS13 and CMS13, for the narrow width, the statistical preference goes UP. That is, the combination of the data likes having signal in it more than the ATLAS13 data did alone. That’s good. But it didn’t go up as much as I might naively imagine. That’s because the cross section preferred by ATLAS13 is far larger than that preferred by CMS13. So when you fit both together, neither experiment’s data is really happy with the fit, but in aggregate the overall preference goes up. Now, if I combine ATLAS13 and CMS13 and assume the particle is wide, the significance goes down (compared to ATLAS13 alone). That’s because CMS just doesn’t see a wide particle; you can see this by eye in the fits I showed earlier: a wide particle sort of “overflows” into bins of $m_{\gamma\gamma}$ for CMS13 that don’t have a lot of extra events in them. So that’s really interesting: the 13 TeV data likes a narrow fit, but not so much a wide fit, and isn’t really comfortable with the number of events ATLAS is seeing.

Now throw in the 8 TeV data. For the narrow fit, things stay right where they are, statistics-wise: just as happy with the fit now as before (though the best-fit cross section drops a bit). For the wide fit, the overall statistical preference goes up, to pretty much exactly where the narrow fit is. What this is saying is that the 8 TeV data (which, remember, doesn’t see anything unusual) can more readily accommodate a wide signal than a narrow one. Basically, in a wide resonance the events get spread across more bins, so it’s easier to hide an excess in the 8 TeV data.

So, this is really interesting. If you believe the 13 TeV data, you sort of prefer a narrow particle. If you believe all the data equally, you are just as happy with a wide particle. But one other thing comes out of this: someone is seeing the wrong number of events, if this is really new physics. The cross section for ATLAS13 is way too high to be easily compatible with the CMS13 result, and would be really hard to hide in the 8 TeV data sets. So, IF this is new physics, it is likely that ATLAS got sort of lucky and saw more events (just a handful) than it “should have.”

Of course, if they hadn’t gotten “lucky” the statistical signal would not have been nearly $3.6\sigma$, and none of these papers on the diphoton anomaly would have been written. So, if you believe it is new physics, you can appeal to the Anthropic Principle and say ATLAS getting a statistically unlikely upward fluctuation was a necessary condition for you to be reading this blog post. If it hadn’t happened, there wouldn’t be over 150 papers on this on arXiv, I wouldn’t have spent Christmas coding, and I wouldn’t have written this for you to read it.

Or you can just say that it’s all a statistical fluctuation and there’s nothing interesting here. After all, we’ve now all agreed that someone’s data is “wrong”; we’re just arguing over how much.

One last test. I want to know how much I can trust the width arguments. So I ask the question “if I had a narrow particle, how often would I see it in the data as looking wide? Or, if I had a wide particle, how often would I think it was narrow from the data?” To answer this, I generated 2000 fake versions of each experimental data set, adding in a narrow or wide signal (I used the best fit scalar particle here, since scanning over all possibilities would have been impossible given my computational limitations). I then fit each pseudoexperiment to both a narrow and wide signal template.

Now, most of the time, a narrow signal will be better fit by the narrow template. And most of the time the wide signal will be better fit by the wide template. But sometimes, by random chance, that won’t happen. And maybe that’s happening in the data we took at the LHC. So, by doing this many times, I can get an idea of how often each hypothesis could “look like” the other.
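Here is a scaled-down Python sketch of this test, with simplifications chosen to keep it fast: the background is held fixed at its true shape rather than floated (only the signal yield is fitted), there are 300 rather than 2000 pseudoexperiments per hypothesis, the narrow signal is a 10 GeV Gaussian, the wide one is that Gaussian convolved with a 45 GeV Breit-Wigner (`scipy.special.voigt_profile`), and the 60 injected signal events are a hypothetical number. The statistic is the difference in best-fit $-\ln L$ between the narrow and wide templates, so positive values "look wide."

```python
import numpy as np
from scipy.optimize import minimize_scalar
from scipy.special import gammaln, voigt_profile
from scipy.stats import norm

BIN = 20.0
m = np.arange(550.0, 960.0, BIN)                          # hypothetical bin centers, GeV
background = 20.0 * np.exp(-0.8 * (m - 750.0) / 100.0)    # held fixed (simplification)
templates = {
    "narrow": norm.pdf(m, 750.0, 10.0) * BIN,                  # detector-limited peak
    "wide": voigt_profile(m - 750.0, 10.0, 45.0 / 2.0) * BIN,  # Gamma = 45 GeV
}

def nll(expected, counts):
    return np.sum(expected - counts * np.log(expected) + gammaln(counts + 1.0))

def best_nll(counts, template):
    """Minimum -ln L over the signal yield for one signal template."""
    res = minimize_scalar(lambda mu: nll(background + mu * template, counts),
                          bounds=(0.0, 500.0), method="bounded")
    return res.fun

def width_statistic(counts):
    """Positive when the wide template fits better, negative when the narrow one does."""
    return best_nll(counts, templates["narrow"]) - best_nll(counts, templates["wide"])

def pseudoexperiments(true_width, n_signal=60.0, n_toys=300, seed=0):
    rng = np.random.default_rng(seed)
    mean_counts = background + n_signal * templates[true_width]
    return np.array([width_statistic(rng.poisson(mean_counts)) for _ in range(n_toys)])

t_narrow = pseudoexperiments("narrow")
t_wide = pseudoexperiments("wide", seed=1)
print("narrow truth looks wide in", np.mean(t_narrow > 0), "of toys")
print("wide truth looks narrow in", np.mean(t_wide < 0), "of toys")
```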

The results are here. These are histograms of the 2000 pseudoexperiments. Blue is pseudoexperiments with a narrow signal injected, red is those with a wide signal. Pseudoexperiments further to the left “looked” narrow; the further to the right, the wider the pseudoexperiment looked. The black vertical line is the result we measured. If I take only the 13 TeV data, I get the plot on the left: that looks more narrow than wide. If I take all the data, I get the plot on the right: that looks more wide than narrow.

I can then calculate the Bayes factor, which is just the ratio of the histograms at the vertical line. The 13 TeV data prefers narrow widths to wide with a Bayes factor of about 1.5-2. All data prefers wide to narrow with… a Bayes factor of about 1.5-2. These are very very mild preferences in the data, so I would conclude that theorists are justified in considering narrow or wide particles in our models, but by no means can you say the data DEMANDS a particular width. We just don’t have enough information yet.
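Reading a Bayes factor off the two histograms amounts to comparing the two estimated densities of the test statistic at the observed value. A minimal sketch with numpy; the two samples and the observed value below are placeholders drawn from shifted normal distributions, not the paper's pseudoexperiments.

```python
import numpy as np

def histogram_bayes_factor(t_wide, t_narrow, t_observed, bins=40):
    """Ratio p(t_obs | wide) / p(t_obs | narrow) from density histograms with shared bins."""
    edges = np.histogram_bin_edges(np.concatenate([t_wide, t_narrow]), bins=bins)
    dens_wide, _ = np.histogram(t_wide, bins=edges, density=True)
    dens_narrow, _ = np.histogram(t_narrow, bins=edges, density=True)
    # index of the bin containing t_observed (clipped so the last edge stays in range)
    i = np.clip(np.searchsorted(edges, t_observed, side="right") - 1, 0, bins - 1)
    return dens_wide[i] / dens_narrow[i]

rng = np.random.default_rng(0)
t_narrow = rng.normal(-1.0, 1.5, 2000)   # placeholder "narrow signal" pseudoexperiments
t_wide = rng.normal(1.0, 1.5, 2000)      # placeholder "wide signal" pseudoexperiments
bf = histogram_bayes_factor(t_wide, t_narrow, t_observed=0.3)
print(f"Bayes factor (wide : narrow) ~ {bf:.2f}")
```

Using shared bin edges for both histograms keeps the ratio well defined; swapping the two samples simply inverts it.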

So that’s pretty much everything I know now about the anomaly at 750 GeV. And now you know it too. It’s nothing too certain, but I expected that going in. $3.6\sigma$ and $2.6\sigma$ are just not that much significance to start with, so any question I asked would have conflicting and uncertain results, with at best only minor preferences for any particular result. But I internalized a lot about the experimental results by forcing myself to grind through the data, and once I’d done that much work, it seemed silly not to write a paper about it.