Paper Explainer: Mapping Dark Matter Through the Dust of the Milky Way Part I

/This is work that I did with my student, Eric Putney, then-Rutgers-postdoc Sung Hak Lim (now at the Institute for Basic Science in Daejeon), and my colleague David Shih. This is actually a continuation of work we’ve been doing for a while, starting with a paper that tested the idea on synthetic data, and then a later paper applying it to real data. I didn’t write blog posts on those because I fell behind on everything starting in 2020 and I’m only just now digging myself out. This new paper is one of a pair, Part II will be coming out in the new year.

So what’s the big idea, and what are we doing now?

In short, we have a new method that takes the motion of stars in the Milky Way and learns the gravitational potential of all the stars and gas and dark matter in the Galaxy. It does this even in the regions where we can’t see most of the stars, due to dust obscuring their light, which is the new development above and beyond the previous work. This paper is about the method, and the next paper will give the results for the gravitational potential and the dark matter density we can learn from it.

As I’ve written about before, if you want to study dark matter, you probably want to know where the dark matter is. In our own home, dark matter is the dominant component of the Milky Way galaxy, though towards the center of the Galaxy stars and gas out-mass it (the supermassive black hole at the center of the Galaxy is not a significant contributor of mass to the entire Galaxy. There is more mass in stars within a few tens of lightyears around that black hole than in the black hole itself). But even though locally the normal matter (“baryons” in the parlance of astrophysics) out-weigh dark matter, knowing how the dark matter is distributed around the Galactic disk and towards the Galactic center could tell us things about the particle physics of dark matter.

So that’s my underlying motivation. But how do we measure invisible dark matter? As always, we use gravity: we look for the pull of dark matter on the visible matter.

In this case, we’ll look at stars. When thinking about machine learning in physics (or really, machine learning for anything) I think it is always best to start with the data. Regardless of the hype about machine learning (or, even worse, the hype about “artificial intelligence”) what we can do and what we can learn from a machine learning algorithm as applied to data is always going to depend on the data itself.

In this project, we are working with the data provided by the Gaia Space Telescope. Gaia is a space-based telescope operated by the European Space Agency (ESA) that measures the positions of the brightest stars in the sky, all 1.5 billion of them (it also measures some stars in other galaxies and some quasars which are supermassive black holes from the other side of the observable Universe). That sounds like a lot, but remember there are on the order of 200 billion stars in the Milky Way. So this is only a small fraction of all that is out there.

Gaia’s telescopes are not a particularly big, meaning it can’t see super dim objects. But what it can do is measure a lot of objects, over and over and over again. It can look many times at all billion+ stars visible to it, and each time it measures where on the sky those stars are, with extremely high precision. That repeated measurement and that precision allows Gaia to do something amazing: it can see stars move.

Motion is space is really hard to capture. Everything in space is moving: the Sun is moving at 220 km/s through the Milky Way, for example. But space is, in case you hadn’t heard, big. So big that even though the Sun and all the stars are moving much faster than any spacecraft we have ever launched, the relative locations between stars just don’t change much on human timescales and so typically we can’t measure the motion from the distance change. As a result, typically the only component of velocity we can measure for stars is the component towards or away from the Earth, measured through the redshift or blueshift of atomic spectral lines using an instrument called a spectrometer.

Spectrometers only measures motion along the line-of-sight, but Gaia can measure the motion on the plane of the sky (transverse motion). Technically, it measures the angular motion (the change in the direction of the apparent line of sight to a star). But Gaia’s precision means Gaia can also measure parallax, the change in the apparent location of a star as the Earth (and Gaia) orbits the Sun. To see parallax, extend your arm and put your thumb out. Then close one eye and then the other. The apparent location of your thumb will “jump” relative to more distant objects, because as you switch eyes you switch the observation point. With both eyes open, your brain multiplexes the two images, and the small changes in the two lines of sight due to parallax is one of the key ways that your brain builds a sense of distance. Screwing with that parallax information easily results in your brain being fooled about how far away or how big objects are (this is what forced perspective filming like in Lord of the Rings does, or the failure mode when people are looking at objects high in the air and can’t tell how big they are).

Similarly, a star will appear to be in different locations when measured in December versus where it appears in July, because the Earth is in two different places at those times. The closer the star, the bigger the apparent change, and conversely distant stars have very small angular changes over the year and so can’t have their distances measured using parallax. Gaia is sensitive enough that it can make parallax distance measurements out to ~10,000 parsecs (10 kpc, or around 33,00 lightyears). This is around the distance between the Galactic center and the Sun, or from the Sun to the closer edge of the stellar disk of the Milky Way.

As an aside, the distance measure “parsec” (around 3.26 lightyears) originates from parallax measurements. A parsec is the distance at which a star will appear to jump by 1 arcsecond (one “parallax second of arc” or “parsec”), where an arcsecond is 1/3600 of a degree. The nearest star, Alpha Centauri, is around 1.3 parsecs away, so the maximum parallax angle is sub-arcsecond. The human eye is physically incapable of resolving such small angles, and so the naked-eye astronomers were not able to measure parallax. The ancient Greeks actually knew that if the Earth moved around the Sun, there should be a parallax angle. They failed to measure any, and so concluded that the Earth wasn’t orbiting the Sun (since they had no conception of how far away the stars are, and thus how small the angle they were looking for was). Fundamentally, the lack of depth perception in space is big problem in astronomy, and we have to work hard to get that information.

Thanks to Gaia, we can build a catalogue of the positions (distance and angular location on the sky) and velocities on the plane of the sky (how the star is moving transverse to us). Gaia is also capable of doing some crude spectroscopy, and so for a selected subset of stars, Gaia can also tell us the line-of-sight motion. There are only a few ten million such stars measured by Gaia (out of 1.5 billion stars total), but for such stars we have the full position (where the star is in the Galaxy) and velocity (how the star is moving in the Galaxy) information. Gaia is also complete: if a star could have been measured by Gaia, it was measured by Gaia. That will be key in what we are going to do.

OK, so we have our dataset: several ten million stars around the Earth with their full position and velocity information (along with some other stellar properties, such as their brightness and their color). What can we do with that?

Think of the stars as tracers — their motion is telling us about the gravitational field they are moving in. I’m not going to follow any individual star, but rather I’m going to treat all the stars in the Galaxy as an ensemble: a group whose statistical behavior tells us something interesting. In some sense, I’m treating the stars like air molecules. You don’t need to know much about where any individual air molecule is, you want to know about the bulk properties of all the air molecules to know about the room in which the air is sitting.

For a bunch of stars sitting in a gravitational potential field such as the Galaxy (and remember, not only do those stars experience the gravity of the Galaxy, they contribute to it because they themselves collectively have enormous mass) there will be regions where the gravitational pull is stronger (because there’s more stuff), and stars will tend to fall in towards that region. But those stars will also fly through that and out the other side, because on the scales of galaxies stars are tiny and thus don’t collide with each other very often. The faster a star is moving to start, the further out it can move from the high-gravity regions before it slows, stops, and turns around. We’re never going to be able to see a single star complete that kind of orbit (it takes tens and hundreds of millions of years), but if we think of a population of stars, there will be some populating different parts of similar orbits.

So there is some relation between a region of high gravity (due to having a lot of mass) and stars “wanting” to pool in that region, and move faster in that region, while also slowing down as they move out of that region. Let’s try to formalize that idea.

What we want to think about is the phase space density of stars. Phase space is the abstract “space” of coordinates that can completely describe the motion of a particle. In this case, that space is six-dimensional: three coordinates of position (where you are in $x$, $y$, and $z$ coordinates, for example) and three for velocity (how fast you are moving in each coordinate axis). There are other ways to break down the coordinates of phase space, but there will always be six coordinates. If you have a single “particle” (a star, in this case) going through an orbit, it will be moving through phase space, speeding up, slowing down, changing direction and position.

A phase space density is the probability of finding a star in particular position and velocity within phase space. If you have a collection of stars, you can imagine that all the stars are “drawn” from the phase space density: on different orbits, in different positions, moving in different ways. I’ll call that phase space density $f$. It is a function of position ${\bf x}$, velocity ${\bf v}$, and time $t$.

I’m now going to make a strong assumption. I’m going to assume the Milky Way is in equilibrium: that the collection of stars and dark matter and gas and such has reached a steady state configuration where on average nothing big is changing significantly. Now, we know this isn’t true. For one thing, the spirals of the Milky Way are regions where stars are being formed. Such regions have young stars, some of which have high mass and are thus burning through their nuclear fuel at astounding rates, this makes them hot and bright. Hot stars are blue, so the spirals in spiral galaxies are notably blue. Those stars burn through their fuel so quickly that they die rapidly (as stars goes, still million of years, but compare to the 10 billion year lifetime of our own Sun). There are small, cool red stars being formed in the spirals too, but they’re dim and thus outshone by their bright blue siblings. Regions which are no longer forming new stars lack the quick-burning blue stars and are said to be “red and dead.”

So spirals are disequilibrium behavior, but they are disequilibrium primarily in the young stars. If we selected a population of stars that was mostly old, then we wouldn’t see the spirals. But there are other forms of disequilibrium. For example, galaxies form by the merger of smaller collections of dark matter and stars. The Milky Way is in the “middle” of a merger with the Large and Small Magellanic Clouds (LMC and SMC). This isn’t going to happen for many hundreds of millions of years, but the LMC is already close enough that its gravity is pulling the Milky Way enough to warp our disk of stars. There is evidence of earlier mergers which are still being “digested” by the Milky Way, with regions of stellar phase space suggestive of a population of stars that being mixed into the rest of the native Milky Way stars (sort of like pouring milk into coffee: there’s a period of time when the milk is sort of mixed but not completely, and can be seen in the “phase space” of the fluid).

So there definitely is disequilibrium in the Milky Way. But we sort of expect it to be small, or at least small enough we can ignore it for now. One thing we are excited about in this work is that we see some paths forward to address this assumption, but that’s future work and for now I’ll just say we’ll make the same assumption everyone else doing this makes and say that the Galaxy is in equilibrium.

What that means is that the phase space density shouldn’t change with time. Mathematically, that means that the derivative of $f$ with respect to time $t$ should be zero: \[ \frac{df(t,{\bf x},{\bf v})}{dt} = 0. \] But, when I take that derivative, there are many possible ways that $f$ could be changing with time. It could just directly changing with time: stars are being born, or stars are dying. We write the rate of change of such a direct dependence on time as $\partial f/\partial t$, where $\partial$ is said as “partial.”

We could also have changes in $f$ because $f$ changes with $\vec{x}$ and ${\bf x}$ changes with time, or $f$ changes as you move around in ${\bf v}$ and ${\bf v}$ changes with time. Putting all that together we get this: \[ \frac{\partial f}{\partial t} + \frac{\partial {\bf x}}{\partial t}\cdot \frac{\partial f}{\partial {\bf x}} + \frac{\partial {\bf v}}{\partial t}\cdot \frac{\partial f}{\partial {\bf v}} = 0 \] But time-changes in ${\bf x}$ are velocities ${\bf v}$. And changes in velocities are accelerations ${\bf a}$, which Newton taught us are related to forces. In this case, the acceleration is caused by gravity, which we can write as a change (with respect to position) of a gravitational potential $\Phi$. Putting that all together and making some mathematical transformations we get the collisionless Boltzmann equation: \[ \frac{\partial f}{\partial t} + {\bf v}\cdot \frac{\partial f}{\partial {\bf x}} + {\bf a}\cdot \frac{\partial f}{\partial {\bf v}} = 0 \] where ${\bf a}$ the gravitational acceleration is ${\bf a} = - {\mathbf \nabla}\Phi$, the gradient of the potential. Under the assumption of equilibrium, the first term, $\partial f/\partial t$, is itself zero.

If you didn’t follow the math, don’t panic. All I’m saying here is that the distribution of stars in position and velocity obeys a particular equation that involves the force of gravity. If I want to somehow invert that equation calculate that force of gravity, I need to know how the density of stars changes as you look at different positions and different velocities.

And here we come to the big problem. How do you calculate those changes in phase space density? We have million stars to play with, which you’d like to say is a lot. However, phase space density is six-dimensional, and so you run into what’s called “the curse of dimensionality.”

Imagine you only had to tell me the distribution of stars in one dimension (say “distance from Earth”). You can imagine measuring every star’s distance and then making a histogram: just make “bins” of different distance ranges and put a star in the bin that corresponds to the range in which the star sits. With millions of stars you could have thousands of bins and still have thousands of stars in each bin. You could then get the derivative of the density with respect to distance by just numerically taking the difference between neighboring bins and dividing by the distance between the bin centers. That’s all a derivative is, at its core.

But now consider two dimensions that you want to bin stars in. You have millions of stars, and you take a thousand bins in each dimension. A thousand times a thousand is a million bins. So now each bin has only a handful of stars each. So when you take the difference between neighboring bins, you can get numerical errors between what you measured and what the “right” answer should be simply because there aren’t enough stars to really be sure the sample you have is the statistically expected result. Three dimensions means that you have (in our simple example) $1000 \times 1000 \times 1000 = 1,000,000,000$ bins, far more than you have stars. Most bins will be empty, and the ones that contain a lone star will be completely dominated by statistical errors. Six dimensions, like the real problem? Yikes. Not nearly enough data to get a non-noisy estimate for the phase space density by “binning” the phase space.

One solution would be to just bin phase space into fewer bins. But now the separation between the bin centers are larger and the derivatives less accurate for that reason. Traditionally, the solution is to take somewhat fewer bins and also integrate the Bolzmann equation over velocities to create the Jeans Equation (named after James Jeans, not the denim). But even then you need to make a bunch of assumptions about the symmetry of the Galaxy to get enough stars in each bin to not be completely dominated by statistical errors.

We would like a better way.

This is where machine learning (finally) comes in. There are a class of machine learning algorithms called normalizing flows. The idea here is that you want to learn the probability distribution of a set of data. You start with a known function (multidimensional Gaussians are the easiest choice) and a neural network that can smoothly deform that initial distribution into some final distribution. You guess that this distribution is the probability distribution of the data. This is a terrible guess, but you can quantify the likelihood that the data was drawn from the guessed distribution (for the physicists, this likelihood is the entropy of the data, which is a very interesting connection). Now figure out how to change your neural network to make a new guessed probability distribution which makes likelihood a bit better (this is the magic of machine learning, backpropagation). Keep doing that. Eventually, you’ll get a neural network that transforms your initial (bad) guess into a pretty good approximation of the probability distribution from which your data was drawn. Without knowing what the right answer is. That is, this is unsupervised machine learning. Above, I show the normalization flow transformation between the Gaussian distribution and the Gaia data we use in this work (video from my student Eric Putney).

But the probability distribution of stars is just what I was calling the phase space density $f$. And even better, the same backpropagation that allows us to train the network allows us to directly calculate the derivatives with respect to position and velocity that we need for the Boltzmann Equation. So machine learning gives us a way to get an estimate of the phase space distribution and its derivatives. And it turns out that this estimate is better than you would get using traditional techniques for a given amount of data.

The stars with full velocity measurement within 4 kpc of the Sun, as seen by Gaia. The horizontal axis is “color” (red to the right, blue to the left). Vertical axis is intrinsic magnitude, brighter stars at the top. Below the horizontal dashed line, the stars are too dim to be seen by gaia if they are at the edge of the 4 kpc volume. The white square selects stars in the Red Clump.

This idea was first proposed by others. In our first paper on the topic, we showed that we could do this using simulated Gaia data created from a simulated galaxy that was similar to the Milky Way. Using a form of normalizing flow known as a Masked Autoregressive Flow (MAF), we showed we could learn the phase space density of stars with realistic measurement errors (and propagate those errors into appropriate uncertainties in our final answer) to learn the accelerations of the stars. Taking another numerical derivative of the accelerations with respect to position, we can learn the mass density $\rho$, using the Poisson Equation: \[ 4\pi G \rho = \nabla^2 \Phi. \]

Mass density measured below and above the solar location, with the average dark matter mass density in the lower panel. From our previous work.

We then were the first to use this technique on the real Gaia data. Starting with 24 million stars with measured positions and full velocities in the Gaia dataset, we first selected a “complete” sample within 4 kpc of the Sun. These stars are stars that are intrinsically bright enough so that wherever they fell in the giant sphere (of radius 4 kpc, or 13,000 lightyears) centered on the Sun, we could see them. Without this selection criteria, we would think that there are more stars near the Sun then elsewhere, simply because we’d be seeing dim stars nearby, but not the equivalent dim stars far away (this is related to what astronomers call Malmquist bias). After this selection, we have 5,811,956 bright stars with all positions and velocity components measured by Gaia. About 66% of them lie in a particular region in the evolution of stars: the “Red Clump” which is a phase of stellar evolution when helium burning has started in the core. These are old stars, which means there’s a decent chance that our equilibrium assumption is at least vaguely true. Good.

In our first paper with real data, we then fit normalizing flows, then took the derivatives, numerically solved for an acceleration at every location, and then took numerical derivatives of accelerations to get density measurements. From this, we could, for example, estimate the density of stars+dark matter along an arc starting below the Galactic disk, moving through the Solar location, and ending high above the disk. Subtract out the mass density of stars and gas, and ta-da, you have the dark matter density. All this without making the same symmetry assumptions that typically are needed for Jeans-type analyses.

However, there were a few problems. The first is that our density calculation was, in the technical terminology, “slow as goddamn hell.” Numerically it just took a ridiculous amount of time, so we were limited in where we had the computing power to actually evaluate the result. So while we could in principle calculate a density anywhere within 4 kpc of the Sun, in practice we could only show a few places.

The second is a more physics-motivated problem. Real gravitational accelerations come from the change in a potential: ${\bf a} = -{\mathbf \nabla}\Phi$. This means that there is a function ($\Phi$) whose slope in different directions tells you the acceleration. We didn’t do that. We just found three numbers (the components of the vector ${\bf a}$) that best satisfied the Boltzmann equation. Not only is $\Phi$ itself interesting, but vectors that come from the gradient of a function have other mathematical properties that our guess for ${\bf a}$ doesn’t have (in particular, real gravitational accelerations are “curl-free” ${\mathbf \nabla} \times {\bf a} = 0$). So our estimates for the accelerations could be non-physical, because we weren’t using all the knowledge we have about what the right answer could possibly look like.

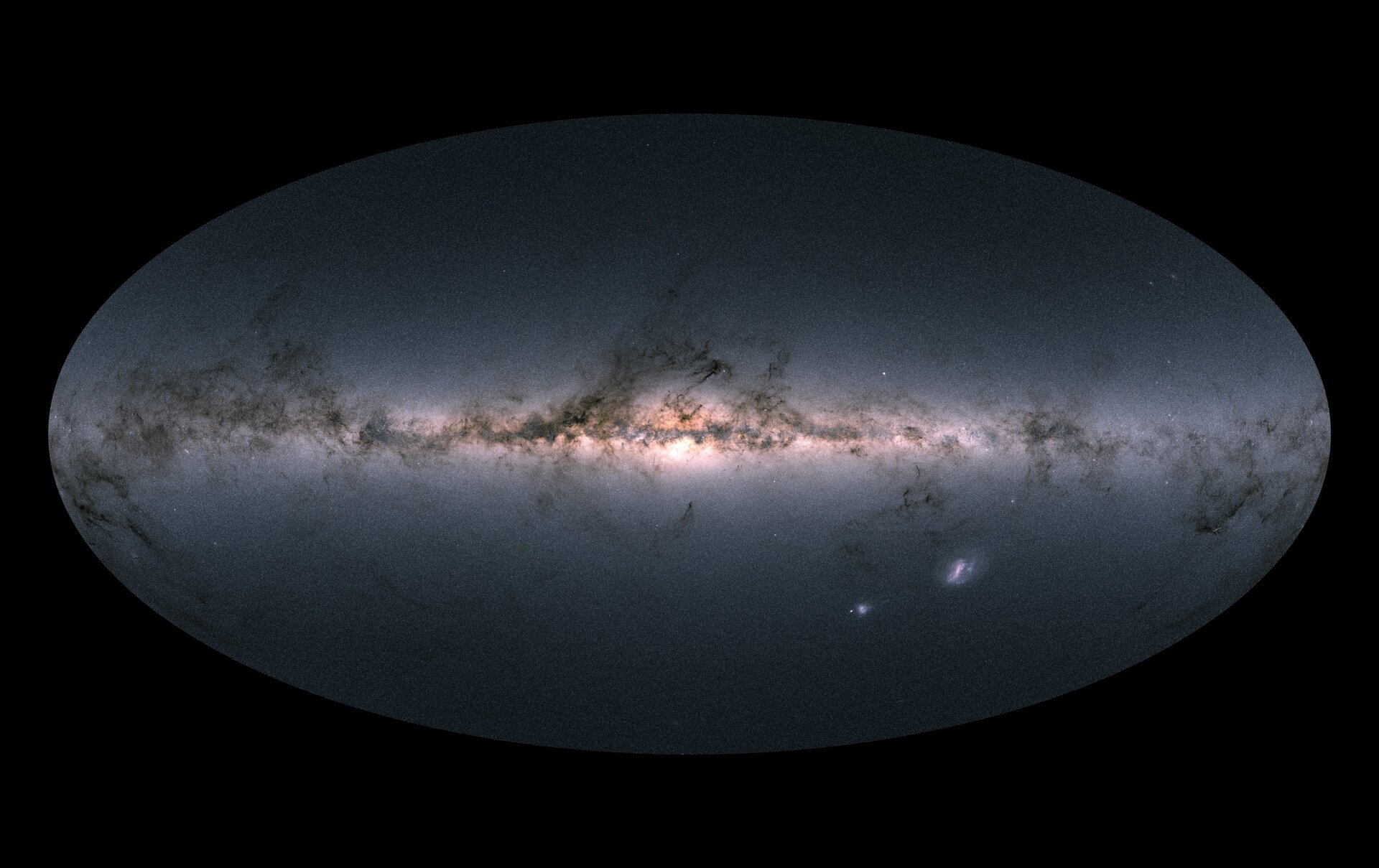

Map of the well-measured bright stars we use in this work, from Gaia data. Known dust features are labeled.

Finally, well, just look at the data itself. See all those dark patches? Those are places on the sky where Gaia doesn’t see many stars. But notice where they are: in the disk of the Galaxy and towards the Galactic Center. Those are not regions where there aren’t many stars. There are in fact a lot of stars there! There’s just also a lot of dust and gas.

Astronomically, dust scatters light, making stars behind clouds of dust redder (for the same reason that the sky is blue and sunsets red, dust preferentially scatters blue light, leaving only the red to be seen by us) and dimmer. Stars that are just bright enough to be seen by Gaia will be dimmed below the sensitivity of the telescope, and disappear from the sample we have. If you’ve seen the Milky Way, you know these dust clouds: you’ve seen them yourself.

Those missing stars means that when we learn the phase space density from data, we’re not learning thing that is satisfying the Boltzmann Equation (and from which we can learn the gravitational acceleration). Instead, we’re learning a combination of the true $f$ and the suppression due to dust. But this observed $f$ has spurious derivatives that give non-physical answers for accelerations and mass density if you use it as “the” $f$ in the Boltzmann Equation.

In our original work, we just avoided this issue by not calculating accelerations in the disk or towards the Galactic Center. That is, we just didn’t look where there was lots of dust. But that’s bad for two reasons: first, just because we didn’t think there’s dust in a particular location, doesn’t mean there’s no dust. Second, we’d really like to know what the gravitational potential is in the disk and towards the Galactic center, because those are interesting regions from the point of view of dark matter. So, what to do.

My colleague David Shih came up with a very clever solution. Frankly, I didn’t think it was possibly going to work, but live and learn.

The idea is that we know we have dust and we know that the dust is going to make it so we see fewer stars. That is, there is some efficiency function $\epsilon$ that is reducing the real phase space density $f$, the one that satisfies the Boltzmann Equation, to an observed $f_{\rm obs}$, which is reflected in our dataset but doesn’t have any relationship like the Boltzmann Equation. Let’s assume that this dust-related extinction function depends only on position, not the velocity of the stars (this should seem reasonable, but its a bit of a subtle argument as to why it might be true. We think it is in our data, but see the Appendix of the paper for more). We can then say that, if we knew $\epsilon({\bf x})$ and $f_{\rm obs}({\bf x},{\bf v})$, we could calculate a “corrected” phase space distribution $f_{\rm corr}$ satisfying the following expression: \[ f_{\rm corr}({\bf x},{\bf v}) = f_{\rm obs}({\bf x},{\bf v})/\epsilon({\bf x}). \] If these assumptions are true, $f_{\rm corr}$ obeys the Boltzmann Equation.

Indeed, you can put this expression into the Boltzmann Equation and get a messy expression that relates both $\Phi$ and $\epsilon$ to the derivatives of $f_{\rm obs}$: \[ {\bf v}\cdot{\mathbf \nabla} \ln f_{\rm obs} - {\bf v}\cdot{\mathbf \nabla} \ln \epsilon ({\bf x}) + {\mathbf \nabla}\Phi({\bf x})\cdot \frac{\partial \ln f_{\rm obs}}{\partial {\bf v}} = 0. \]

How does this help? Well, $\epsilon$ and $\Phi$ are just functions of position. Unknown functions, but functions. We have a way to determine unknown functions from data, its called machine learning. We take our normalizing flows, trained on data as before, and those provide the derivatives of $f_{\rm obs}$. We then create two neural networks, one representing $\epsilon$ and the other $\Phi$. We train those two networks across the positions inside our 4 kpc sphere where we have Gaia data, finding the neural networks that give function outputs that minimize the Boltzmann Equation at each point.

This creates a version of $\epsilon$ that represents the number of stars at a given point that we could have observed but didn’t. Mostly, this is due to dust, though there are other effects: for example, in very dense starfields, Gaia undercounts stars, an effect known as “crowding.” We also get a function $\Phi$, which represents the gravitational potential. It’s derivative is the acceleration, and it has the curl-free physical constraint that it should. It is also much faster to evaluate than our previous method.

This paper describes how we do this machine learning, and looks at the output for the $\epsilon$ network. Part II (coming to theaters near you in 2025) will look at the gravitational potential and its related quantities (acceleration and mass density).

So, what does the $\epsilon$ network tell us? One really simple way to look at these two plots here, which shows the phase space density as learned from data $f_{\rm obs}$ (on the left) compared to the density that is corrected by the removal of dust $f_{\rm corr}$ (on the right). Those dusty patches in the disk are gone, and the smoother phase space density is what the distribution of these old stars would look like (according to the data) if there wasn’t dust in the way.

Another thing we can do is compare our $\epsilon$ with the “right answer” using what astronomers know about dust. To be clear: we’re not astronomers and we don’t know much about dust. But other people do, and they have maps of the sky made using other observations that tell us how much dust should be along different lines of sight. However, those dust maps aren’t our $\epsilon$.

The efficiency function as extrapolated from known dustmaps

The Efficiency function as learned by our network

Our “efficiency function” is a convolution of dust along a line of sight and a population of stars at a particular point. From dust maps, we can estimate an equivalent efficiency function, but only if the stars that we’re using were visible in Gaia. Recall that we threw out dim stars when building our dataset. Well, many of those dim stars are bright stars that were dimmed by dust. For stars that aren’t near the edge of our 4 kpc sphere, we can recover what $\epsilon$ should be, from the data directly. Using a particular dustmap “L22” we built a data-driven (not machine-learned) “$\epsilon_{L22}$ function that should be good up to a around 2 kpc from the Sun: beyond that too many stars will be too dim to be included in the Gaia dataset and our way of estimating $\epsilon_{L22}$ will miss them.

But you can see from comparing the machine-learned no-dust-knowledge-needed $\epsilon$ to $\epsilon_{L22}$, we are recovering the known dusty features in the regions where we expect the two to agree (close to the Sun). There are still clearly some problems in our machine-learned solution in the disk and directly towards the Galactic Center, where the dust was heaviest and the data most sparse, but if we look at the error budget from our results we see that the network is also less confident about its results there, which is as it should be. The result is bad, but we know it is bad.

So, in this work we have expanded the region in which we can apply the Boltzmann technique to learn the gravitational field, using a completely new machine learning approach to the problem. In doing so, we have learned from the data directly, the efficiency function of dust obscuring stars in the Galaxy. In our next work, we’ll show the results for the associated gravitational potential and use this machinery to measure the distribution of dark matter within 4 kpc of the Sun. Stay tuned!.